Think about how you read. Do you say every word to yourself in your head?

That’s a process called internal vocalization, or subvocalization: when you say words to yourself in your head, the muscles around your vocal cords and larynx make tiny movements. People have been fascinated by this phenomenon, also called “silent speech,” for decades, mostly with an eye to suppressing it as a way to read faster. But internal vocalization has a new application that could change the way we interact with computers.

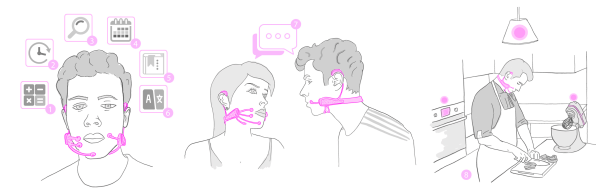

[Photo: Lorrie LeJeune/MIT]

Researchers at the MIT Media Lab have created a prototype of a device you wear on your face that detects the tiny shifts that occur in your speech muscles when you subvocalize. That means you can subvocalize a word, and the wearable can detect it and translate it into a meaningful command for a computer. The computer connected to the wearable can then perform a task for you and communicate back to you through bone conduction.

What does that mean? Basically, you could silently say a mathematical expression like 1,567 + 437 to yourself, and the computer could tell you the answer (2,004) by conducting sound waves through your skull.

The device and its corresponding platform are called AlterEgo, and together they are a prototype for how artificially intelligent machines might communicate with us in the future. The researchers are focused on a particular school of thought around AI, one that emphasizes building AI to augment human capacity rather than replace people. “We thought it was important to work on an alternative vision, where basically people can make very easy and seamless use of all this computational intelligence,” says Pattie Maes, professor of media technology and head of the Media Lab’s Fluid Interfaces group. “They don’t need to compete, they can collaborate with AIs in a seamless way.”

The researchers are careful to point out that AlterEgo is not a brain-computer interface, a not-yet-possible technology in which a computer can directly read someone’s thoughts. In fact, AlterEgo was deliberately designed not to read its user’s mind. “We believe that it’s absolutely important that an everyday interface does not invade a user’s private thoughts,” says Arnav Kapur, a PhD student in the Fluid Interfaces group. “It doesn’t have any physical access to the user’s brain activity. We think a person should have absolute control over what information to convey to a person or a computer.”

Using internal vocalization to give people a private, natural way of communicating with a computer, without speaking aloud at all, is a clever and still largely unexplored idea in human-computer interaction research. Kapur, who says he learned about internal vocalization while watching YouTube videos about speed reading, tested the idea by placing electrodes at different spots on test subjects’ faces and throats (his brother was his first subject). He could then measure neuromuscular signals as people subvocalized words like “yes” and “no.” Over time, Kapur found low-amplitude, low-frequency signatures that corresponded to different subvocalized words. The next step was to train a neural network to distinguish between these signatures so the computer could accurately determine which word someone was subvocalizing.
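
To give a rough feel for that pipeline (record multichannel signals, derive a signature per word, then classify new recordings), here is a minimal Python sketch. It is not AlterEgo's method. It assumes each subvocalized word arrives as a short window of samples from a few electrode channels, summarizes each channel by its mean absolute amplitude, and swaps in a simple nearest-centroid classifier for the neural network the team actually trained. The function names and the synthetic sine-wave "signals" are hypothetical.

```python
import math
from statistics import mean

# Hypothetical format: a recording is a list of electrode channels,
# and each channel is a list of voltage samples for one subvocalized word.
Recording = list[list[float]]


def features(rec: Recording) -> list[float]:
    """Summarize each channel by its mean absolute amplitude (a crude hand-made feature)."""
    return [mean(abs(x) for x in channel) for channel in rec]


def train_centroids(examples: dict[str, list[Recording]]) -> dict[str, list[float]]:
    """Average each word's training feature vectors into one 'signature' per word."""
    centroids = {}
    for word, recordings in examples.items():
        vectors = [features(r) for r in recordings]
        centroids[word] = [mean(column) for column in zip(*vectors)]
    return centroids


def classify(rec: Recording, centroids: dict[str, list[float]]) -> str:
    """Pick the word whose signature is nearest (Euclidean distance) to the recording's features."""
    f = features(rec)
    return min(centroids, key=lambda word: math.dist(f, centroids[word]))


def synthetic(amplitudes: list[float], n: int = 50) -> Recording:
    """Fake low-frequency signal: a 3-cycle sine wave per channel, scaled by the given amplitude."""
    return [[a * math.sin(2 * math.pi * 3 * t / n) for t in range(n)] for a in amplitudes]


# Toy training set: "yes" is strongest on channel 0, "no" on channel 1.
training = {
    "yes": [synthetic([1.0, 0.2]), synthetic([0.9, 0.25])],
    "no": [synthetic([0.2, 1.0]), synthetic([0.25, 0.9])],
}
centroids = train_centroids(training)
print(classify(synthetic([0.95, 0.3]), centroids))  # yes
```

A real system would need far richer features and many more examples per word; the sketch only shows the shape of the train-then-classify loop.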

But Kapur wasn’t just interested in a computer being able to hear what you say inside your head; he also wanted it to be able to respond. This is called a closed-loop interface, in which the computer acts almost like a confidant in your ear. Using bone conduction audio, which transmits sound as vibrations through the bones of your skull so you can hear it without putting a headphone in your ear, Kapur created a wearable that could detect your silent speech and then talk back to you.

[Image: Arnav Kapur/Neo Mohsenvand/courtesy MIT Media Lab]

The next step was to see how the technology could be applied. Kapur started by building an arithmetic application, training the neural network to recognize the digits one through nine and a set of operations like addition and multiplication. He also built an application that let the wearer ask Google basic questions, like what the weather will be tomorrow, what time it is, or where a particular restaurant is.
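
The arithmetic application implies a small post-processing step: the recognizer produces a sequence of words, and something has to turn them into a calculation. The sketch below is a guess at how that could work, not a description of Kapur's code. It assumes a vocabulary of digit words ("one" through "nine") and three operator words ("plus", "minus", "times"), that consecutive digit words form a single number, and that multiplication binds tighter than addition and subtraction. The names `DIGITS`, `OPERATORS`, `parse`, and `evaluate` are hypothetical.

```python
# Hypothetical vocabulary: digit words one through nine plus three operator words.
DIGITS = {
    "one": 1, "two": 2, "three": 3, "four": 4, "five": 5,
    "six": 6, "seven": 7, "eight": 8, "nine": 9,
}
OPERATORS = {"plus", "minus", "times"}


def parse(words: list[str]) -> list:
    """Turn recognized words into [number, operator, number, ...]; consecutive digits form one number."""
    tokens: list = []
    current = None
    for word in words:
        if word in DIGITS:
            current = (0 if current is None else current) * 10 + DIGITS[word]
        elif word in OPERATORS:
            if current is None:
                raise ValueError(f"operator {word!r} has no number before it")
            tokens += [current, word]
            current = None
        else:
            raise ValueError(f"{word!r} is outside the trained vocabulary")
    if current is None:
        raise ValueError("expression must end with a number")
    tokens.append(current)
    return tokens


def evaluate(tokens: list) -> int:
    """Apply 'times' first, then 'plus' and 'minus' from left to right."""
    terms = [tokens[0]]
    for op, num in zip(tokens[1::2], tokens[2::2]):
        if op == "times":
            terms[-1] *= num  # fold the multiplication into the previous term
        else:
            terms += [op, num]
    result = terms[0]
    for op, num in zip(terms[1::2], terms[2::2]):
        result = result + num if op == "plus" else result - num
    return result


# The article's example: 1,567 + 437
spoken = ["one", "five", "six", "seven", "plus", "four", "three", "seven"]
print(evaluate(parse(spoken)))  # 2004
```

Rejecting any word outside the vocabulary reflects the constraint of a system trained on a small, fixed set of words.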

Kapur also wondered whether AlterEgo could let an AI sit in your ear and aid in decision-making. Inspired by Google’s AlphaGo AI, which beat the world’s top-ranked Go player in May 2017, Kapur built another application that could advise a human player on where to move next in games of Go or chess. After narrating their opponent’s move to the algorithm in their ear, the player could ask for advice on what to do next or move on their own; if they were about to make a stupid move, AlterEgo could let them know. “It was a metaphor for how in the future, through AlterEgo, you could have an AI system on you as a second self and augment human decision making,” Kapur says.

So far, AlterEgo is 92% accurate at detecting the words someone says to themselves, within the limited vocabulary Kapur has trained it on. And it only works for one person at a time: the system has to spend about 10 to 15 minutes learning how each new user subvocalizes before it will work.

Despite these limits, AlterEgo opens up a wealth of research opportunities. Maes says that since the project was published in March, the team has received many requests asking how AlterEgo could help people with speech impediments, people with diseases like ALS that make speech difficult, and those who’ve lost their voice. Kapur is also interested in exploring whether the platform could be used to augment memory. For instance, he envisions subvocalizing a list, or a person’s name, to AlterEgo and then being able to recall that information later. That could be useful for those of us who tend to forget names, as well as for people losing their memory to conditions like dementia and Alzheimer’s.

[Photo: MIT Media Lab]

These are long-term research goals. In the near term, Kapur hopes to expand AlterEgo’s vocabulary so that it can understand more subvocalized words. With a larger vocabulary, the platform could be tested in real-world settings and perhaps opened up to other developers. Another key area for improvement is the device’s appearance. Right now, it looks like a minimalist version of the orthodontic headgear you might have gotten in eighth grade to straighten your teeth, which is not ideal for everyday wear. So the team is looking into new types of materials that could detect the electro-neuromuscular signals while being inconspicuous enough to make wearing AlterEgo socially acceptable.

But there are challenges ahead, primarily a lack of data. Speech recognition algorithms can be trained on the vast amount of recorded speech that is readily available online, but there is no comparable data for subvocalization. That means the team has to gather all of it themselves, at least for now.

Still, AlterEgo’s implications are exciting. The technology points to a new way of interacting with computers, one that doesn’t require a screen but still preserves the privacy of our thoughts.

“Traditionally, I think computers are generally considered as external tools,” Kapur says. “Could we have a complementary bridge between humans and computers, and build a system that could actually enable us to avail the advantage of computers?”